针对 2048 游戏,实现一个 AI 程序,当用户选择提示功能时,系统根据当前局势找出适当的决策帮助用户赢得游戏。系统界面实现由 Android 完成,主体 AI 使用了传统的博弈树模型中常用的算法,即 Minimax 和 Alpha-beta 剪枝,重点落在启发函数的设计上。项目的启发函数从四个方面评估当前的局势,结合迭代深搜,在限定搜索时间内做出决策,赢得游戏的概率高于 90%。

2048AI设计与实现

项目简介

2048 游戏简介

2048 是一款比较流行的数字游戏,最早于 2014 年 3 月 20 日发行。原版 2048 首先在 GitHub 上发布,原作者是 Gabriele Cirulli,后被移植到各个平台。

游戏规则:

每次可以选择上下左右其中一个方向去滑动,每滑动一次,所有的数字方块都会往滑动的方向靠拢外,系统也会在空白的地方乱数出现一个数字方块,相同数字的方块在靠拢、相撞时会相加。不断的叠加最终拼凑出 2048 这个数字就算成功。

2048 智能提示实现

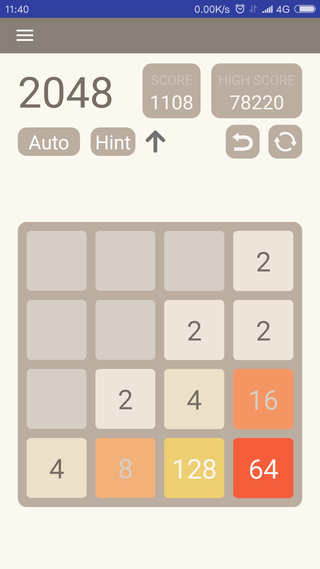

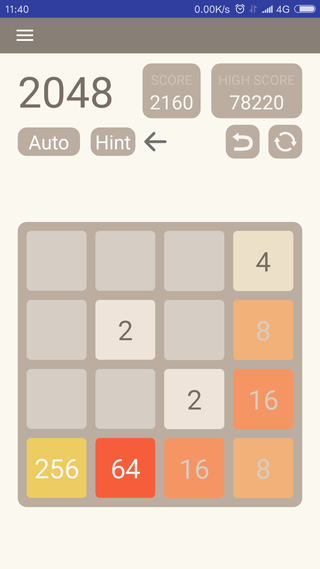

系统 AI 提供提示功能,从当前格局出发,寻找所能达到搜索层次的最优的解

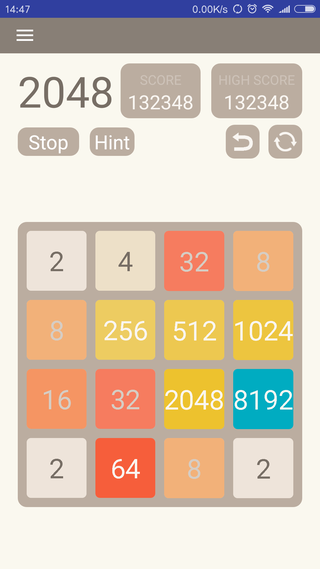

点击 Hint 按钮,显示滑动提示

建模

Since the game is a discrete state space, perfect information, turn-based game like chess and checkers, I used the same methods that have been proven to work on those games, namely minimax search with alpha-beta pruning . Since there is already a lot of info on that algorithm out there, I'll just talk about the two main heuristics that I use in the static evaluation function and which formalize many of the intuitions that other people have expressed here.

2048 本质上可以抽象成信息对称双人对弈模型(玩家向四个方向中的一个移动,然后计算机在某个空格中填入 2 或 4 )。这里“信息对称”是指在任一时刻对弈双方对格局的信息完全一致,移动策略仅依赖对接下来格局的推理。作者使用的核心算法为对弈模型中常用的带 Alpha-beta 剪枝的 Minimax。这个算法也常被用于如国际象棋等信息对称对弈 AI 中。

算法分析

http://blog.codinglabs.org/articles/2048-ai-analysis.html

算法实现

格局评估指标

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 private double evaluate () { double smoothWeight = 0.1 , monoWeight = 1.3 , emptyWeight = 2.7 , maxWeight = 1.0 ; return grid.smoothness() * smoothWeight + grid.monotonicity() * monoWeight + Math.log(getEmptyNum(grid.getCellMatrix())) * emptyWeight + grid.maxValue() * maxWeight; }

采用线性函数,并添加权重系数,前 3 项指标能衡量一个局面的好坏,而最大数该项,则让游戏 AI 多了一点积极和"冒险"。

Minimax Search 和 Alpha Beta Pruning 的实现

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 52 53 54 55 56 57 58 59 60 61 62 63 64 65 66 67 68 69 70 71 72 73 74 75 76 77 78 79 80 81 82 83 84 85 86 87 88 89 90 91 92 93 94 95 96 97 98 99 100 101 102 103 104 105 106 107 108 109 110 111 112 113 private SearchResult search (int depth, double alpha, double beta, int positions, int cutoffs) { double bestScore; int bestMove = -1 ; SearchResult result = new SearchResult (); int [] directions = {0 , 1 , 2 , 3 }; if (this .grid.playerTurn) { bestScore = alpha; for (int direction : directions) { GameState newGrid = new GameState (this .grid.getCellMatrix()); if (newGrid.move(direction)) { positions++; AI newAI = new AI (newGrid); newAI.grid.playerTurn = false ; if (depth == 0 ) { result.move = direction; result.score = newAI.evaluate(); } else { result = newAI.search(depth - 1 , bestScore, beta, positions, cutoffs); if (result.score > 9900 ) { result.score--; } positions = result.positions; cutoffs = result.cutoffs; } if (result.score > bestScore) { bestScore = result.score; bestMove = direction; } if (bestScore > beta) { cutoffs++; return new SearchResult (bestMove, beta, positions, cutoffs); } } } } else { bestScore = beta; List<Candidate> candidates = new ArrayList <>(); List<int []> cells = this .grid.getAvailableCells(); int [] fill = {2 , 4 }; List<Double> scores_2 = new ArrayList <>(); List<Double> scores_4 = new ArrayList <>(); for (int value : fill) { for (int i = 0 ; i < cells.size(); i++) { this .grid.insertTitle(cells.get(i)[0 ], cells.get(i)[1 ], value); if (value == 2 ) scores_2.add(i, -this .grid.smoothness() + this .grid.islands()); if (value == 4 ) scores_4.add(i, -this .grid.smoothness() + this .grid.islands()); this .grid.removeTile(cells.get(i)[0 ], cells.get(i)[1 ]); } } double maxScore = Math.max(Collections.max(scores_2), Collections.max(scores_4)); for (int value : fill) { if (value == 2 ) { for (Double fitness : scores_2) { if (fitness == maxScore) { int index = scores_2.indexOf(fitness); candidates.add(new Candidate (cells.get(index)[0 ], cells.get(index)[1 ], value)); } } } if (value == 4 ) { for (Double fitness : scores_4) { if (fitness == maxScore) { int index = scores_4.indexOf(fitness); candidates.add(new Candidate (cells.get(index)[0 ], cells.get(index)[1 ], value)); } } } } for (int i = 0 ; i < candidates.size(); i++) { int pos_x = candidates.get(i).x; int pos_y = candidates.get(i).y; int value = candidates.get(i).value; GameState newGrid = new GameState (this .grid.getCellMatrix()); newGrid.insertTitle(pos_x, pos_y, value); positions++; AI newAI = new AI (newGrid); newAI.grid.playerTurn = true ; result = newAI.search(depth, alpha, bestScore, positions, cutoffs); positions = result.positions; cutoffs = result.cutoffs; if (result.score < bestScore) { bestScore = result.score; } if (bestScore < alpha) { cutoffs++; return new SearchResult (-1 , alpha, positions, cutoffs); } } } return new SearchResult (bestMove, bestScore, positions, cutoffs); }

己方格局评价函数实现

平滑性

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 public double smoothness () { int smoothness = 0 ; for (int x = 0 ; x < 4 ; x++) { for (int y = 0 ; y < 4 ; y++) { if (this .cellMatrix[x][y] != 0 ) { double value = Math.log(this .cellMatrix[x][y]) / Math.log(2 ); for (int direction = 1 ; direction <= 2 ; direction++) { int [] vector = this .vectors[direction]; int cnt_x = x, cnt_y = y; do { cnt_x += vector[0 ]; cnt_y += vector[1 ]; } while (isInBounds(cnt_x, cnt_y) && isCellAvailable(cnt_x, cnt_y)); if (isInBounds(cnt_x, cnt_y)) { if (cellMatrix[cnt_x][cnt_y] != 0 ) { double targetValue = Math.log(cellMatrix[cnt_x][cnt_y]) / Math.log(2 ); smoothness -= Math.abs(value - targetValue); } } } } } } return smoothness; }

单调性

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 39 40 41 42 43 44 45 46 47 48 49 50 51 public double monotonicity () { int [] totals = {0 , 0 , 0 , 0 }; for (int x = 0 ; x < 4 ; x++) { int current = 0 ; int next = current + 1 ; while (next < 4 ) { while (next < 4 && this .cellMatrix[x][next] == 0 ) next++; if (next >= 4 ) next--; double currentValue = (this .cellMatrix[x][current] != 0 ) ? Math.log(this .cellMatrix[x][current]) / Math.log(2 ) : 0 ; double nextValue = (this .cellMatrix[x][next] != 0 ) ? Math.log(this .cellMatrix[x][next]) / Math.log(2 ) : 0 ; if (currentValue > nextValue) { totals[0 ] += nextValue - currentValue; } else if (nextValue > currentValue) { totals[1 ] += currentValue - nextValue; } current = next; next++; } } for (int y = 0 ; y < 4 ; y++) { int current = 0 ; int next = current + 1 ; while (next < 4 ) { while (next < 4 && this .cellMatrix[next][y] == 0 ) next++; if (next >= 4 ) next--; double currentValue = (this .cellMatrix[current][y] != 0 ) ? Math.log(this .cellMatrix[current][y]) / Math.log(2 ) : 0 ; double nextValue = (this .cellMatrix[next][y] != 0 ) ? Math.log(this .cellMatrix[next][y]) / Math.log(2 ) : 0 ; if (currentValue > nextValue) { totals[2 ] += nextValue - currentValue; } else if (nextValue > currentValue) { totals[3 ] += currentValue - nextValue; } current = next; next++; } } return Math.max(totals[0 ], totals[1 ]) + Math.max(totals[2 ], totals[3 ]); }

空格数

1 2 3 4 5 6 7 private int getEmptyNum (int [][] matrix) { int sum = 0 ; for (int i = 0 ; i < matrix.length; i++) for (int j = 0 ; j < matrix[0 ].length; j++) if (matrix[i][j] == 0 ) sum++; return sum; }

最大数

1 2 3 4 5 6 7 8 public double maxValue () { return Math.log(ArrayUtil.getMax(cellMatrix)) / Math.log(2 ); }

对方格局评价函数实现

对方对于格局的目标就是使得连通个数变多并且使得平滑性降低,表现效果就是使得格局趋于散乱,让玩家难以合并相同的数字。

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 22 23 24 25 26 27 28 29 30 31 32 33 34 35 36 37 38 public int islands () { int islands = 0 ; marked = new boolean [4 ][4 ]; for (int x = 0 ; x < 4 ; x++) { for (int y = 0 ; y < 4 ; y++) { if (this .cellMatrix[x][y] != 0 ) { this .marked[x][y] = false ; } } } for (int x = 0 ; x < 4 ; x++) { for (int y = 0 ; y < 4 ; y++) { if (this .cellMatrix[x][y] != 0 && !this .marked[x][y]) { islands++; mark(x, y, this .cellMatrix[x][y]); } } } return islands; } private void mark (int x, int y, int value) { if (x >= 0 && x <= 3 && y >= 0 && y <= 3 && (this .cellMatrix[x][y] != 0 ) && (this .cellMatrix[x][y] == value) && (!this .marked[x][y])) { this .marked[x][y] = true ; for (int direction = 0 ; direction < 4 ; direction++) { int [] vector = this .vectors[direction]; mark(x + vector[0 ], y + vector[1 ], value); } } }

迭代深搜

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 public int getBestMove () { return this .iterativeDeep(100 ); } private int iterativeDeep (long minSearchTime) { long start = new Date ().getTime(); int depth = 0 ; int best = -1 ; do { SearchResult newBest = this .search(depth, -10000 , 10000 , 0 , 0 ); if (newBest.move == -1 ) break ; else best = newBest.move; depth++; } while (new Date ().getTime() - start < minSearchTime); return best; }

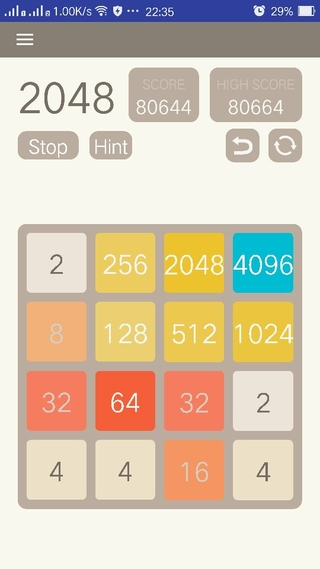

运行效果

自动运行结果

结论

游戏 AI 的决策过程,是标准的 Minimax Search 结合 Alpha Beta Pruning 的实现。 所有的方向(上下左右)都会去尝试。然而在对手做决策时,不是每个空格都去尝试填 2 或 4 。而是选择了最坏的局面,做为搜索分支的剪枝条件,选择性地丢弃了很多搜索分支。对于选择性忽略搜索节点,在某些情况下,会失去获取最优解的机会。不过砍掉了很多分支后, 其搜索深度大大加强,生存能力更强大。另外,超时判断在每个深度探索结束后进行,这未必会精确,甚至误差很大,但不管如何游戏 AI 基本达到了每 100ms 决策一步的要求。最后,根据原作者 ovolve 创造性的思维和建模,把环境拟人化的对弈模型,使得传统的博弈树模型能应用于此,这是面对反馈类场景的一种很好的评估决策思路。

源码和 apk

https://github.com/wylu/md2048

References

https://stackoverflow.com/questions/22342854/what-is-the-optimal-algorithm-for-the-game-2048

http://ov3y.github.io/2048-AI/

https://github.com/ov3y/2048-AI

https://en.wikipedia.org/wiki/Evaluation_function

《Artificial Intelligence : A Modern Approach》 (Third Edition) 第5章 对抗搜索

http://www.flyingmachinestudios.com/programming/minimax/

https://www.neverstopbuilding.com/blog/2013/12/13/tic-tac-toe-understanding-the-minimax-algorithm13/

http://web.cs.ucla.edu/~rosen/161/notes/alphabeta.html

https://www.cnblogs.com/mumuxinfei/p/4415352.html

http://blog.codinglabs.org/articles/2048-ai-analysis.html

http://www.cnblogs.com/mumuxinfei/p/4305981.html

http://www.cnblogs.com/mumuxinfei/p/4379595.html

http://www.cnblogs.com/mumuxinfei/p/4396339.html

http://www.cnblogs.com/mumuxinfei/p/4398680.html